FITEVAL

FITEVAL is a program for the objective assessment of model goodness-of-fit with statistical significance, based on Ritter and Muñoz-Carpena (2013, 2020).

- Download FITEVAL GUI Installer for: Windows [1Mb] or macOS [1.7Mb]. Please see installation and troubleshooting instructions here.

- Download FITEVAL for Matlab [272Mb]

- Download FITEVAL example inputs [6kb]

- Library requirements (see below)

Click on the tabs below for the GUI or MatLab program documentation.

Program description

FITEVAL is a software tool for standardized model evaluation that incorporates data and model uncertainty following the procedures presented in Ritter and Muñoz-Carpena (2013, 2020). The tool is implemented in MATLAB® and is available free of charge as a computer application (MS-Windows® and macOS®) or as a MATLAB function. Among other statistics, FITEVAL includes the quantification of model prediction error (in units of the output) as the root mean squared error (RMSE); and the computation of the Nash and Sutcliffe (1970) coefficient of efficiency (NSE) and Kling–Gupta (2009) efficiency (KGE) as dimensionless goodness-of-fit indicators. FITEVAL uses the general formulation of the coefficient of efficiency Ej, which allows modelers to compute modified versions of this indicator,

$$E_j = 1 - \frac{\sum_{i=1}^{N} |O_i - P_i|^j}{\sum_{i=1}^{N} |O_i - B_i|^j}$$

where Oi and Pi are the observed and predicted values, and Bi is a benchmark series, which may be a single number (such as the mean of observations), seasonally varying values (such as seasonal means), or benchmark values predicted using a function of other variables. For j=2 and Bi=Ō, Ej yields the classical E2=NSE. The code can be used with model efficiency threshold values other than those proposed, or to calculate the Legates and McCabe (1999) modified form of the coefficient of efficiency (E1) instead of NSE. Note that when transformed series are supplied in the ASCII text input file, such as {√Oi, √Pi}, {ln(Oi + ε), ln(Pi + ε)} or {1/(Oi + ε), 1/(Pi + ε)}, the program computes NSE (and RMSE) on square-root, log and inverse transformed values, respectively (Le Moine, 2008; Oudin et al., 2006).
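As an illustration, the general coefficient of efficiency described above can be sketched in a few lines of Python. This is not FITEVAL's MATLAB code: the function names `efficiency` and `rmse` and the sample data are hypothetical, and Ej is taken here as one minus the ratio of the summed |Oi − Pi|^j to the summed |Oi − Bi|^j, with the mean of the observations as the default benchmark.

```python
import math


def efficiency(obs, pred, j=2, benchmark=None):
    """General coefficient of efficiency E_j for paired series.

    With j=2 and the mean of the observations as benchmark this is NSE;
    with j=1 it is the modified form E1.
    """
    if benchmark is None:
        mean_obs = sum(obs) / len(obs)
        benchmark = [mean_obs] * len(obs)
    num = sum(abs(o - p) ** j for o, p in zip(obs, pred))
    den = sum(abs(o - b) ** j for o, b in zip(obs, benchmark))
    return 1.0 - num / den


def rmse(obs, pred):
    """Root mean squared error, in the units of the model output."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))


# Hypothetical paired data, for illustration only
obs = [1.0, 2.0, 3.0, 4.0, 5.0]
pred = [1.1, 1.9, 3.2, 3.8, 5.1]

nse = efficiency(obs, pred, j=2)   # classical NSE (E2)
e1 = efficiency(obs, pred, j=1)    # modified form (E1)

# NSE on log-transformed values; eps is an arbitrary small constant
eps = 0.01
log_nse = efficiency([math.log(o + eps) for o in obs],
                     [math.log(p + eps) for p in pred])
```

Passing a list as `benchmark` covers the seasonally varying or function-based benchmarks mentioned above.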

Hypothesis testing of NSE exceeding a threshold value is based on approximating the probability density function of Ej by bootstrapping (Efron and Tibshirani, 1993), or by block bootstrapping (Politis and Romano, 1994) in the case of time series (non-independent, autocorrelated values). To rate model performance based on NSE (i.e. E2), the following threshold values delimit the model efficiency classes or "pedigree": NSE<0.65 (Unsatisfactory), 0.65≤NSE<0.80 (Acceptable), 0.80≤NSE<0.90 (Good) and NSE≥0.90 (Very good). If other model efficiency thresholds can be justified for particular applications, they can be changed in FITEVAL (see Configuration options). Statistically accepting model performance (i.e., rejecting the null hypothesis NSE<0.65) requires the one-tailed p-value to be below the chosen significance level α. This p-value quantifies the strength of agreement with the null hypothesis. Thus, for p-value > α, the model goodness-of-fit should be considered Unsatisfactory. Usual values of α are 0.1, 0.05 or 0.01; the choice depends on the context of the research, that is, on how strong the evidence must be to reject the null hypothesis (NSE<0.65). As a starting point, the least restrictive significance level (α=0.10) can be adopted (Ritter and Muñoz-Carpena, 2013). In addition, the guidance on model interpretation and evaluation considering intended model uses provided by Harmel et al. (2014) can be taken into consideration.
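The testing idea can be sketched with an ordinary (non-block) bootstrap in Python. This is a simplified illustration under stated assumptions, not FITEVAL's implementation: the p-value is taken as the fraction of bootstrap NSE values that fall below the threshold, resamples with zero variance in the observations are skipped, and all names and data are hypothetical.

```python
import random


def nse(obs, pred):
    """Nash-Sutcliffe efficiency of paired series."""
    mean_obs = sum(obs) / len(obs)
    num = sum((o - p) ** 2 for o, p in zip(obs, pred))
    den = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - num / den


def bootstrap_nse(obs, pred, n_boot=2000, seed=0):
    """NSE values from resampling the (obs, pred) pairs with replacement."""
    rng = random.Random(seed)
    n = len(obs)
    values = []
    while len(values) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        o = [obs[i] for i in idx]
        p = [pred[i] for i in idx]
        if len(set(o)) > 1:  # NSE is undefined when all observations are equal
            values.append(nse(o, p))
    return values


def p_value(boot_values, threshold=0.65):
    """Assumed one-tailed p-value: share of bootstrap NSE below the threshold."""
    return sum(v < threshold for v in boot_values) / len(boot_values)


def pedigree(nse_value, thresholds=(0.65, 0.80, 0.90)):
    """Map an NSE value to the efficiency classes listed above."""
    labels = ("Unsatisfactory", "Acceptable", "Good", "Very good")
    return labels[sum(nse_value >= t for t in thresholds)]


# Hypothetical data: predictions within 0.1 of the observations
obs = [float(i) for i in range(1, 11)]
pred = [o + 0.1 * (-1) ** i for i, o in enumerate(obs)]

boot = bootstrap_nse(obs, pred, n_boot=500, seed=1)
p = p_value(boot)
alpha = 0.10
accepted = p < alpha
rating = pedigree(nse(obs, pred)) if accepted else "Unsatisfactory"
```

For autocorrelated time series, the resampling step would draw blocks of consecutive pairs instead of single pairs, which this sketch does not attempt.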

For realistic model evaluation, consideration of the uncertainty associated with the observations and the model predictions is critical. Two methods to account for measurement and/or model uncertainties are implemented within FITEVAL: a) Probable Error Range (PER), which has the advantage of being non-parametric but can yield excessive increases in model performance; and b) Correction Factor (CF), which provides a more realistic modification of the deviation term used in the goodness-of-fit indicators and allows for model uncertainty, but requires assumptions about the probability distribution of the uncertainty in the data and/or the model predictions. FITEVAL provides modelers with an easy-to-use tool for evaluating model performance while accounting for data and prediction uncertainty in a simple and quick procedure.

FITEVAL GUI Program Installation

FITEVAL GUI stand-alone can be installed both for Windows and macOS. It requires the Matlab Runtime libraries. These are installed automatically by running the installer. The installer can be found in the installation ZIP package downloaded from the links above.

After installation, move the "Examples" directory found in the ZIP package to a working directory on your computer. The examples it contains are used in the documentation below.

Troubleshooting macOS GUI installation:

- The installer will advise that "the program cannot be opened because it is from an unidentified developer". By default, macOS only allows users to install applications from 'verified sources'. In effect, this means that users are unable to install most applications downloaded from the internet or stored on physical media without receiving this error message. To bypass this error follow these steps: a) Open System Preferences, either by clicking the System Preferences icon in the Dock or by going to Apple Menu > System Preferences; b) Open the Security & Privacy pane by clicking Security & Privacy, and make sure that the General tab is selected. Click the icon labeled "Click the lock to make changes"; c) At the bottom of the Security & Privacy > General pane you will see the message that the "app was blocked because it is not from an identified developer". Click on "Open anyway" to continue the installation. For more information about this message, please visit Apple's KB article on the topic http://support.apple.com/kb/HT5290.

Troubleshooting Windows GUI installation:

- The installer might throw a proxy error. This happens when either anti-virus software or the network configuration is restricting access to download ports. The installer needs to download and install the MatLab Compiler Runtime environment (MCR). If you run into trouble, please use a VPN and try again, or contact your system administrator.

- If the application reports that the MCR is not found when it is started for the first time after installation, restart the machine. Sometimes the installer does not refresh the MCR paths.

- The program needs to be started with "Run as administrator". This can be configured permanently by right-clicking the application icon, selecting "Advanced" under the "Shortcut" tab, and checking "Run as administrator" there.

- For installation in virtual machines or shared computers, make sure that the user has a local administrator profile.

- The first time the program is run, after loading one of the input files (see examples below), it will ask you to save the configuration file. Just press "Done" and run again.

- When running a case, the program might show an "Error at code line ____: Invalid file identifier. Use fopen to generate a valid identifier". This error is related to the item above: the program needs to be run with administrator privileges. Please follow the instructions above to run as administrator.

Program Use & Output

Starting the program GUI

After starting the program (click on the app icon), a file explorer window will appear to select the working directory for the model evaluation. This directory cannot be write-protected (e.g., Program Files on Windows or Applications on macOS). After selecting the working directory, the program GUI opens as shown below.

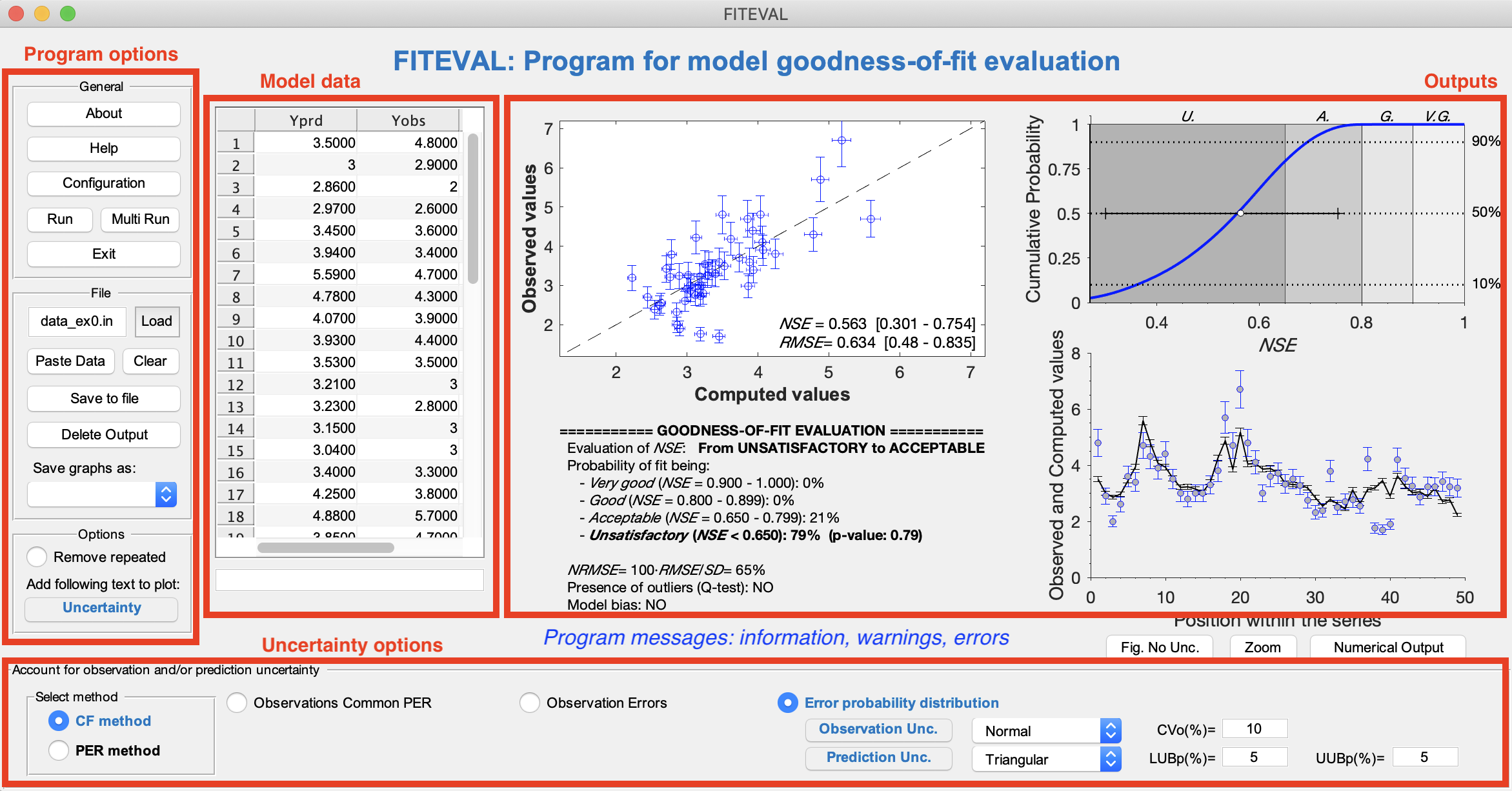

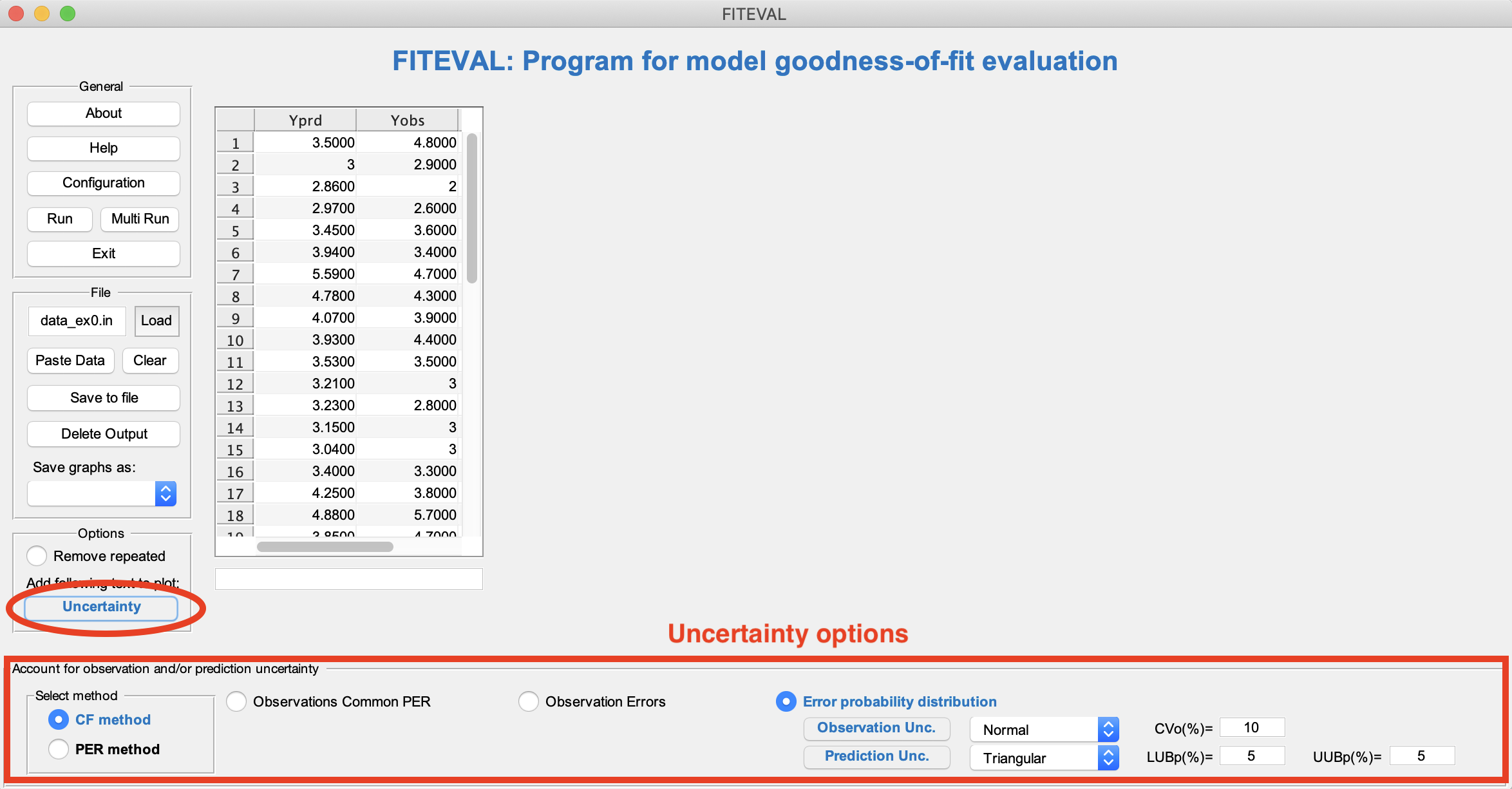

The program GUI is divided into the workspaces shown in the image below (outlines and red labels). The main program options are laid out on the left of the GUI, the model evaluation data are shown next to them, and the outputs appear on the right. Specific options to incorporate uncertainty in the evaluation are presented at the bottom (only visible when the Uncertainty option is selected). Note the information messages in blue that appear during the different program actions. Details on the options and the workspaces are provided in the sections below.

Input data file description

A user-supplied ASCII input data file (with extension ".in") is required and must be located in a working directory selected by the user. In this documentation we will use the "Examples" directory provided in the installation package (see the note in the installation section). The input data file (*.in) must contain at least two paired vectors or columns,

{observations, predictions [...]}
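A minimal Python sketch of reading such a file is given here for readers who want to pre-check their data outside FITEVAL. It assumes whitespace-separated columns, uses only the first two columns, and drops any pair in which either value is the missing-value token nan; how FITEVAL itself treats missing values internally is not stated here, and the function name `read_pairs` is hypothetical.

```python
import math


def read_pairs(lines):
    """Return (obs, pred) lists from lines of 'observation prediction [...]'."""
    obs, pred = [], []
    for line in lines:
        fields = line.split()
        if len(fields) < 2:
            continue  # skip blank or incomplete lines
        o, p = float(fields[0]), float(fields[1])  # float("nan") parses as NaN
        if math.isnan(o) or math.isnan(p):
            continue  # drop pairs with a missing value
        obs.append(o)
        pred.append(p)
    return obs, pred


# Inline sample standing in for the contents of a .in file
sample = """1.0 1.2
2.0 nan
3.0 2.9
"""
obs, pred = read_pairs(sample.splitlines())
```

To read from disk, the same function can be called as `read_pairs(open(path))` with a path of your choice.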

The input file may contain missing values that must be denoted as "nan". The program can handle many additional options (comparisons with benchmark data, uncertainty in observed values, uncertainty in the simulated results and combinations of these) by specifying additional columns in the data input file. See the help file (UnOptions_help_mr.pdf) and the corresponding input file examples provided in the distribution directory.

Outputs description

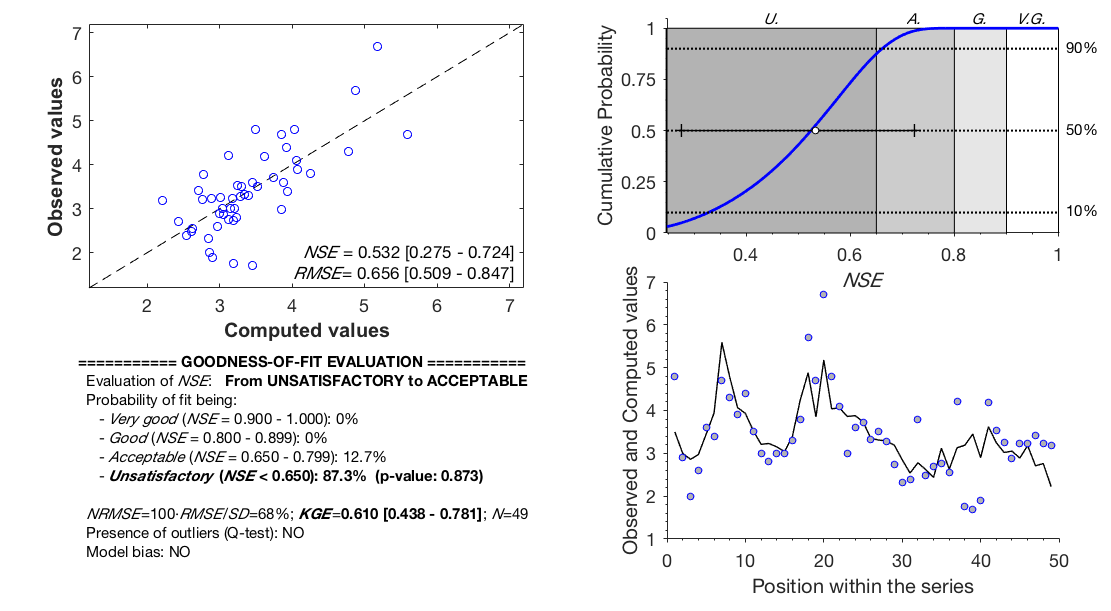

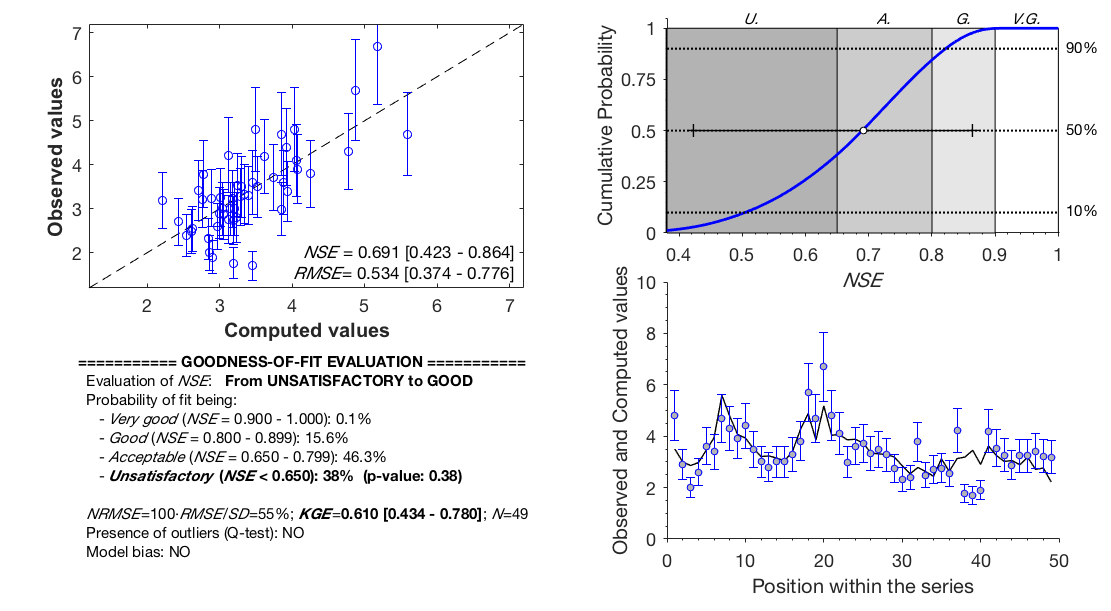

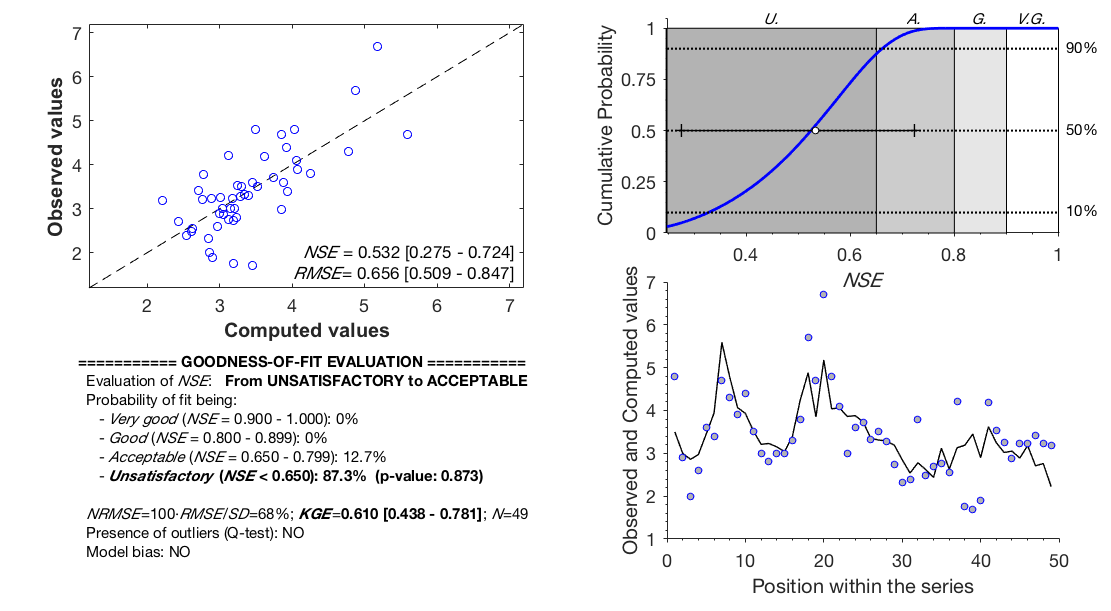

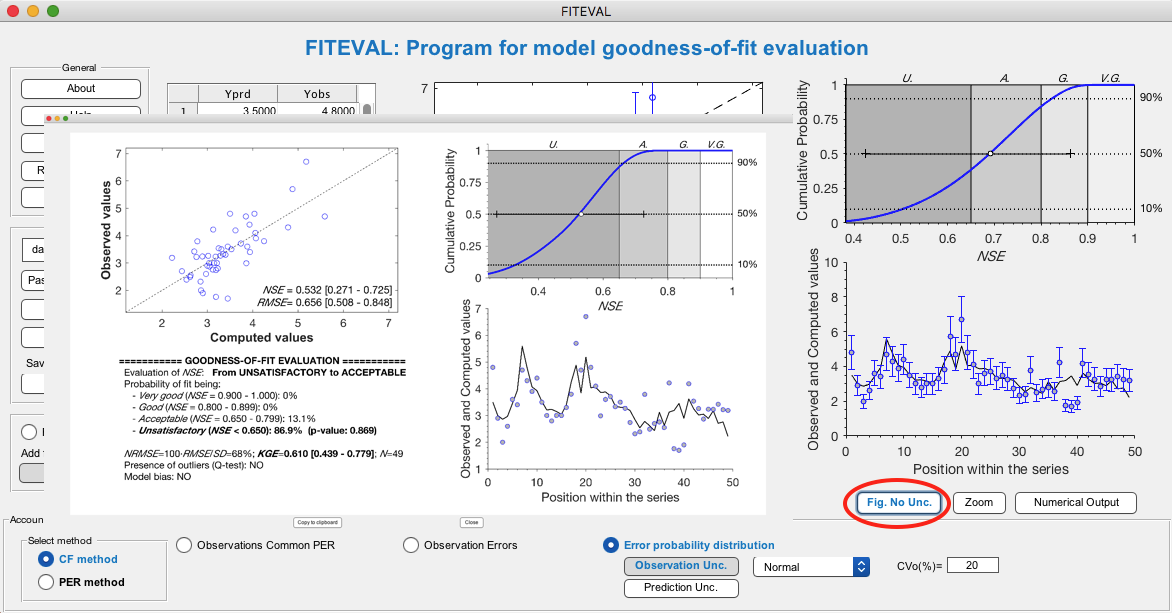

After running the evaluation case, the program presents a summary of statistics and a composite figure with the graphical assessment. The goodness-of-fit evaluation results are also provided in a Portable Document Format (PDF) file and a Portable Network Graphics (PNG) file with the same name as the input data file, written to the working directory where the .in file is located. The screen output and PDF files contain the following information:

a) plot of observed vs. model computed values illustrating the match on the 1:1 line (line of perfect agreement);

b) calculation of NSE and RMSE and their corresponding 95% confidence intervals;

c) plot of the approximated NSE cumulative probability function superimposed on the NSE "pedigree" regions;

d) plot illustrating the series of the observed and computed values;

e) qualitative goodness-of-fit interpretation based on the model "pedigree" classes (Acceptable, Good and Very Good);

f) p-value representing the probability of wrongly accepting the fit (NSE≥ NSEthreshold=0.65). For p-value >α, the model fit is considered Unsatisfactory;

g) model bias when it exceeds a given threshold (>5% by default);

h) possible presence of outliers;

i) normalized root mean squared error NRMSE (in %)= 100·RMSE/(standard deviation of the observations);

j) calculation of Kling–Gupta efficiency (KGE) and corresponding 95% confidence interval.

The 1:1 and series plots help to visually inspect the degree of similarity between the two series and to detect which observations are best or worst predicted by the model. When uncertainty is incorporated in the evaluation, the resulting 1:1 plot shows the uncertainty boundaries as vertical (measurement uncertainty) or horizontal (model uncertainty) error bars, whereas the series plot shows both as vertical error bars. Additionally, the numerical output is stored in an ASCII text file with the same name as the input data file and extension '.out'.
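Two of the listed statistics, NRMSE (item i) and KGE (item j), can be sketched in Python as follows. The NRMSE follows the definition in item i. The KGE uses the commonly cited 2009 formulation, 1 − √((r−1)² + (α−1)² + (β−1)²), with r the linear correlation, α the ratio of standard deviations and β the ratio of means; that FITEVAL uses exactly this variant, and the sample (n−1) standard deviation, are assumptions of this sketch.

```python
import math


def mean(x):
    return sum(x) / len(x)


def std(x):
    """Sample standard deviation (n - 1 denominator; an assumption here)."""
    m = mean(x)
    return math.sqrt(sum((v - m) ** 2 for v in x) / (len(x) - 1))


def nrmse_percent(obs, pred):
    """100 * RMSE / (standard deviation of the observations)."""
    rmse = math.sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))
    return 100.0 * rmse / std(obs)


def kge(obs, pred):
    """Kling-Gupta efficiency from correlation, variability and bias ratios."""
    mo, mp = mean(obs), mean(pred)
    so, sp = std(obs), std(pred)
    cov = sum((o - mo) * (p - mp) for o, p in zip(obs, pred)) / (len(obs) - 1)
    r = cov / (so * sp)   # linear correlation
    alpha = sp / so       # variability ratio
    beta = mp / mo        # bias ratio
    return 1.0 - math.sqrt((r - 1) ** 2 + (alpha - 1) ** 2 + (beta - 1) ** 2)


# Hypothetical paired data
obs = [1.0, 2.0, 3.0, 4.0, 5.0]
pred = [1.1, 1.9, 3.2, 3.8, 5.1]
print(f"NRMSE = {nrmse_percent(obs, pred):.1f}%  KGE = {kge(obs, pred):.3f}")
```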

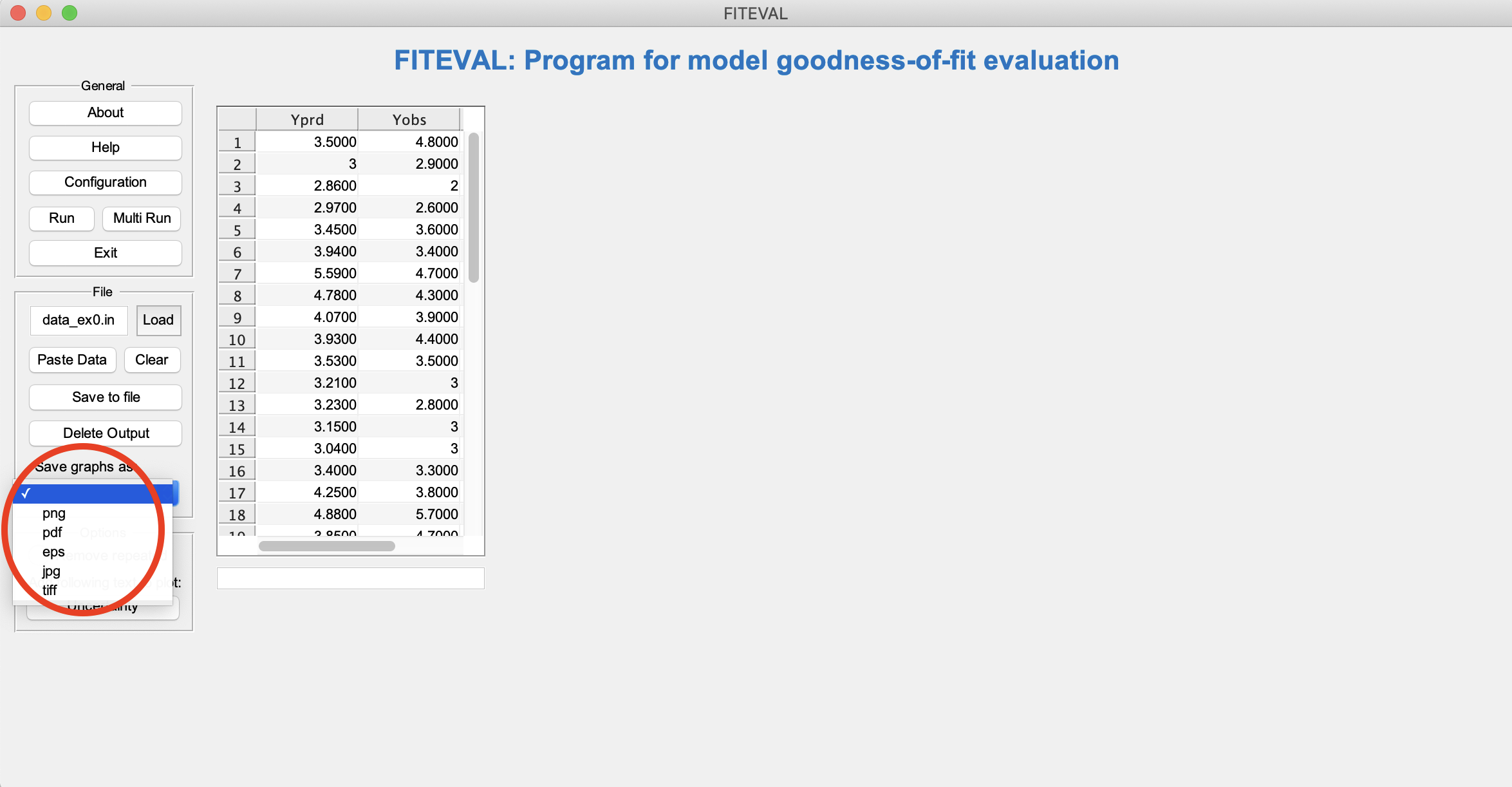

The individual plots can also be obtained as separate files in a chosen graphic format ('eps', 'pdf', 'jpg', 'tiff', or 'png'). For this, select the file type from the GUI menu, or leave it blank for no separate figures, as shown below,

Repeated input paired values can be removed by selecting that option at the bottom left of the GUI. In this case, the program output indicates the number of removals only if repeated paired values are present. Additional details are provided below in the section "Checking the effect of repeated values".

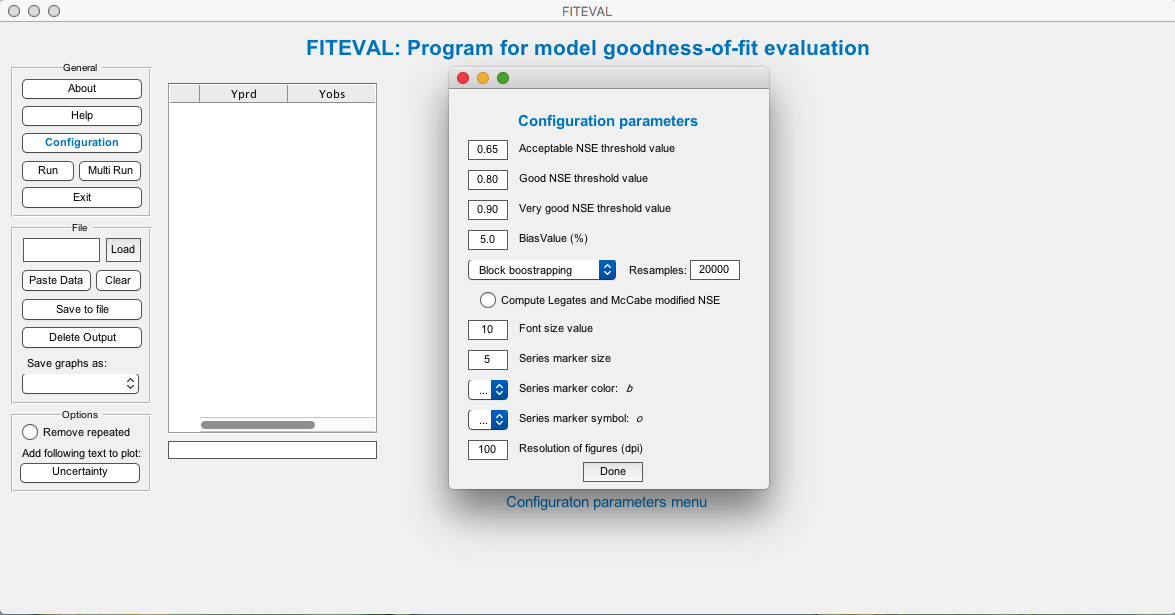

Configuration options

Several configuration options are offered to accommodate running FITEVAL with other threshold values, calculating the Legates and McCabe (1999) modified NSE, and others. To access the options, select "Configuration" on the left of the GUI menu as shown below. Notice that this panel also opens automatically the first time that the program is opened.

The options available include: NSE thresholds for Acceptable, Good, Very good model "pedigrees" (with default values of 0.65, 0.80, 0.90, Ritter and Muñoz-Carpena, 2013); relative bias threshold value (%); bootstrapping options for the original Efron and Tibshirani (1993) bootstrap or the Politis and Romano (1994) block bootstrap when dealing with time series or autocorrelated data; the number of bootstrapping samples (a value of 20000 is recommended for robustness); the option for computing E1; the graphs' font size; the markers' symbol type and colours; and the resolution of the saved figures (default: 100 dots per inch). For a full description of the combinations of markers and colors that can be used in MatLab, please visit http://www.mathworks.com/help/matlab/ref/linespec.html. For example, "o,b" in the screenshot above indicates "blue circle" markers.

Running FITEVAL GUI without consideration of uncertainty

Running a simple case

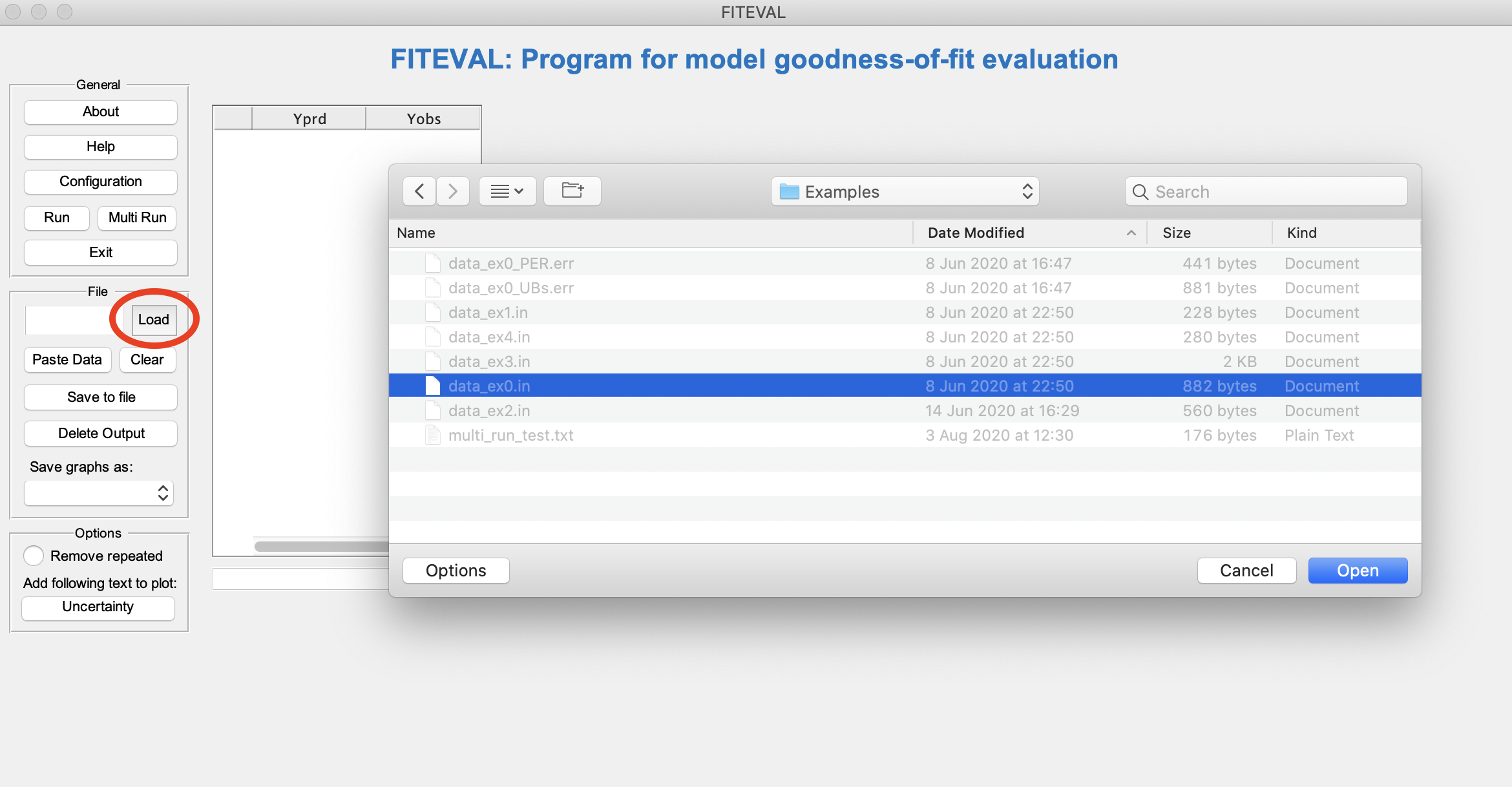

The {obs_i, pred_i} dataset can be input into the application from an existing input file or by cutting and pasting from another application. Several example input files are included in the ZIP installation package (Examples directory). The program input file must be written in ASCII or text format (be sure to select this option when saving the file with the editor of your choice). We recommend that the ASCII input files have the extension .in, but the program can use any other extension. To start the model evaluation from an existing file, click on "Load" in the options on the left-hand side of the GUI and a file selection panel will open. Select an input file and click "Open". If the file is present in the working directory, it can also be loaded by typing its name in the corresponding field (on the left of the "Load" button) and pressing "Enter". For example, we will open "data_ex0.in" in the image below,

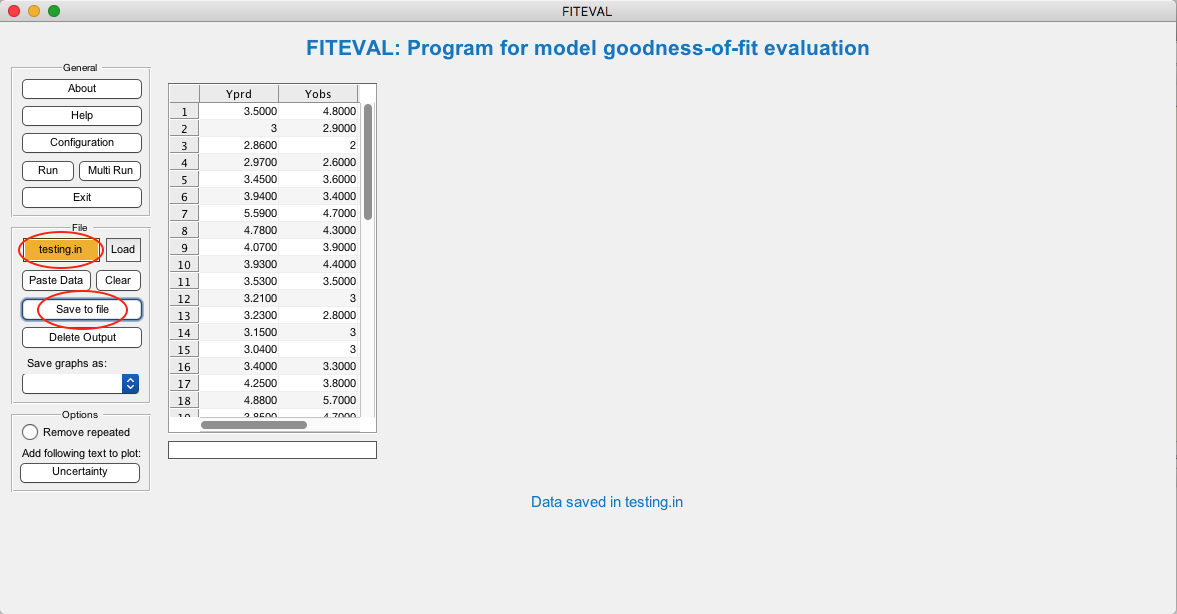

After loading the file the contents will appear in the GUI (Ypred and Yobs columns) so that the user can check that the correct data are imported.

Note that the data can also be selected from another application without opening a file by copying the data in the clipboard first from the other application, and then clicking "Paste data" in the FITEVAL GUI. The pasted data can be saved into a file by providing the new file name (with file extension .in) in the "File" field and pressing "Save to file" in the GUI menu as shown below,

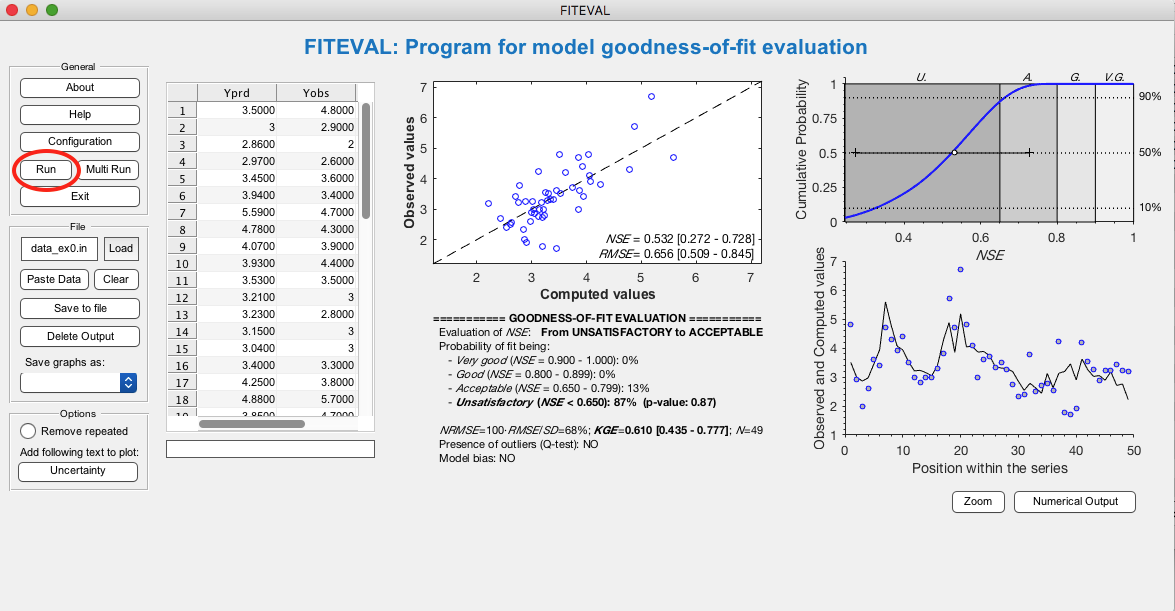

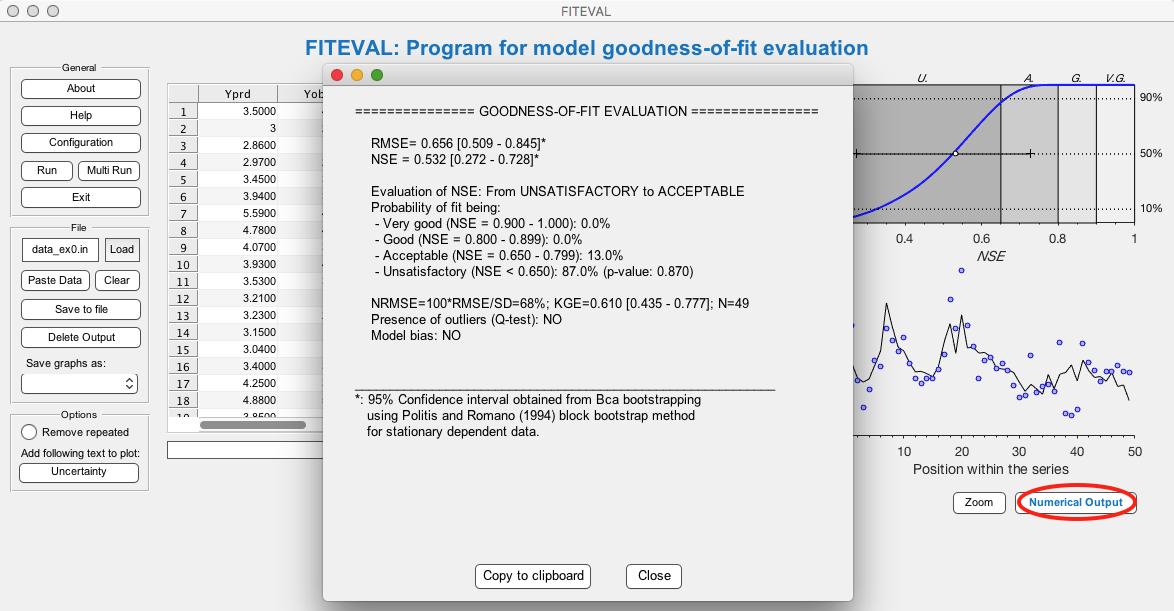

Once the data are loaded, the model evaluation is run by pressing "Run" in the left menu. If needed, old output files for the loaded file can be deleted automatically by pressing "Delete Output" before running. Note that while running (and during other program operations) a message in blue appears at the bottom of the GUI to inform the user of the action being taken. After the program finishes, the output results (described above) are presented as shown below,

The corresponding pdf file generated automatically in this run is also shown below (located in the same directory as the input file),

The numerical output from the model evaluation can be opened by pressing on "Numerical Output" as shown in the image below. The text output can be copied also into the clipboard and pasted as text into other applications as needed. Similarly, the graphical output file can also be opened by pressing "Zoom" in the GUI and copied and pasted in other applications.

Outliers and model bias

Outliers (Dixon's test) and bias (with threshold set in the configuration file above) are automatically checked when running the program to guide the user about other potential sources of differences between observed and simulated values. Information for both tests is included in the graphical (filled symbols and annotated coordinates) and text outputs. An example is provided below for the file "data_ex2.in" (included in Examples directory with the installer distribution package),

Note that the specific outlier coordinates are provided also in the numerical output window and in the output text file found in the working directory.

Checking the effect of repeated values

For data series that move between or around certain thresholds, data values at the thresholds are often repeated several times. If the model has been calibrated to those thresholds, the repeated values can unfairly bias the model assessment.

For example, in the graphs below, the model evaluation results for the example file "data_ex3.in" are presented. The series graph shows that the observed and simulated values move between an upper and lower threshold most of the time. By selecting the FITEVAL GUI option "Remove repeated" as presented above, the program will evaluate this potential effect. The results of the model evaluation with and without repeated values are presented below for comparison.

a) Results with all data (without removing repeated values),

b) Results with repeated data removed (24 data pairs were removed),
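The idea behind removing repeated pairs can be sketched in Python. The rule FITEVAL applies is not spelled out here, so this sketch makes its own assumptions: a pair counts as repeated only when both the observed and the predicted values match an earlier pair exactly, and the first occurrence is kept. The function name `remove_repeated` and the data are hypothetical.

```python
def remove_repeated(obs, pred):
    """Drop later duplicates of identical (obs, pred) pairs.

    Returns the filtered series and the number of pairs removed.
    """
    seen = set()
    kept_obs, kept_pred = [], []
    for pair in zip(obs, pred):
        if pair in seen:
            continue  # an identical pair was already kept
        seen.add(pair)
        kept_obs.append(pair[0])
        kept_pred.append(pair[1])
    removed = len(obs) - len(kept_obs)
    return kept_obs, kept_pred, removed


# Hypothetical series that sits at a lower threshold (0.0) part of the time
obs = [0.0, 0.0, 1.5, 0.0, 2.0]
pred = [0.0, 0.0, 1.4, 0.0, 2.1]
obs_u, pred_u, n_removed = remove_repeated(obs, pred)
```

Computing the goodness-of-fit statistics on both the full and the filtered series then shows how much the repeated values contribute to the rating.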

Running FITEVAL GUI accounting for observations and model uncertainty

Following Ritter and Muñoz-Carpena (2020), several options are provided in FITEVAL to consider uncertainty in observations and/or model outputs, based on Harmel and Smith (2007) and Harmel et al. (2010) and on extensions by the FITEVAL authors. The uncertainty options are presented in the GUI when selecting "Uncertainty" in the general program options on the left of the GUI. The options are summarized in the help file (UnOptions_help_mr.pdf).

As shown in the image below, after selecting "Uncertainty" the user has to select the following uncertainty options before running the case: uncertainty method (PER with common value or individual errors, or Correction Factor, CF), type of uncertainty (observed data, model, or data and model), and, when CF is selected, the type and statistics of the error distribution function. PER refers to the estimation of measurement error by propagation of errors (as the square root of the sum of the individual squared errors in the measurement chain). Other estimators of measurement error can be obtained from repeated values of the measurement at each instance. Please see below.
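The propagation-of-errors estimate mentioned above is a root sum of squares, which can be sketched in Python. The component errors used here are hypothetical values chosen for illustration, not recommendations.

```python
import math


def probable_error_range(component_errors_percent):
    """Combine the individual errors (in %) of a measurement chain."""
    return math.sqrt(sum(e ** 2 for e in component_errors_percent))


# Hypothetical chain with two error sources of 3% and 4%
per = probable_error_range([3.0, 4.0])  # sqrt(9 + 16) = 5.0 (%)
```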

IMPORTANT NOTE:

- When running uncertainty cases the program will run twice, the first time with uncertainty and the second without uncertainty. Please compare both sets of results (PDF files generated in working directory, with and without "U" in file name).

A) Running FITEVAL GUI accounting for uncertainty of observed data

To include uncertainty in observations when evaluating the model, the user must click "Uncertainty" in the general program menu (bottom left) and select the proper uncertainty options in the menu that unfolds at the bottom of the GUI. Two types of observation errors can be assigned: general and individual data errors.

General observation error

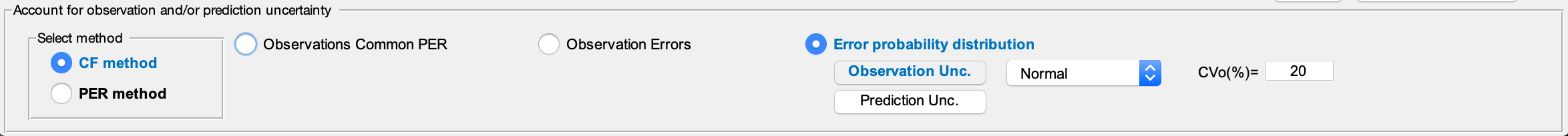

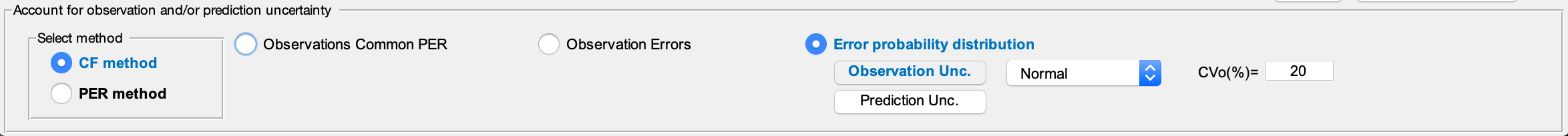

In this case, general bounds (based on PER or CF) or a user-selected error distribution are assigned to all observations in the ".in" file. For example, to specify the CF method with a Normal distribution for observed data error with CV(%)=20, the user would select the options shown below (corresponding to option 3 and case 9 in UnOptions_help_mr.pdf),

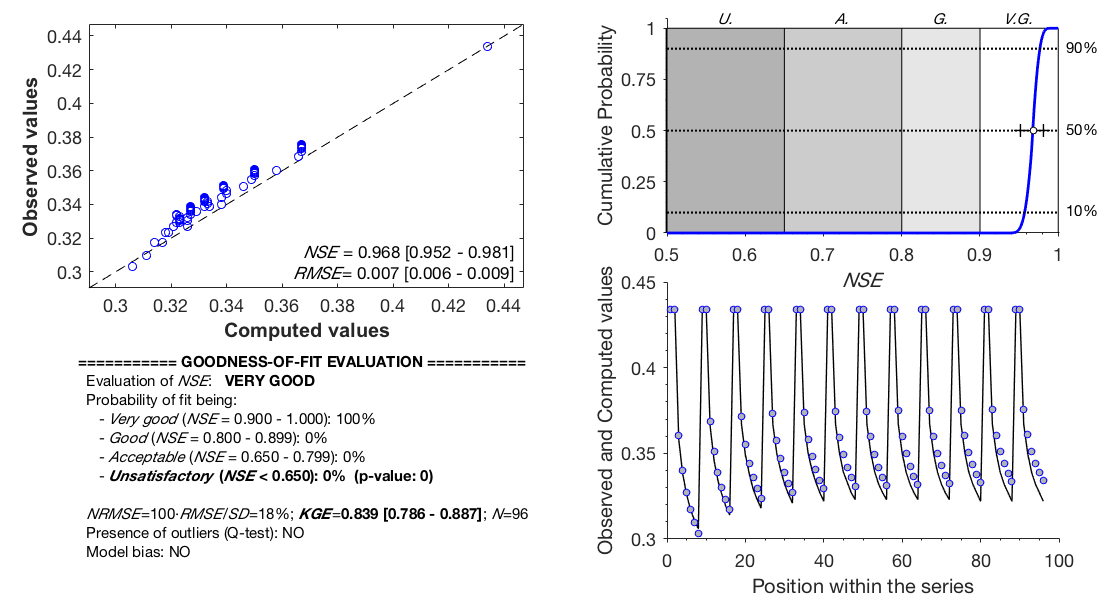

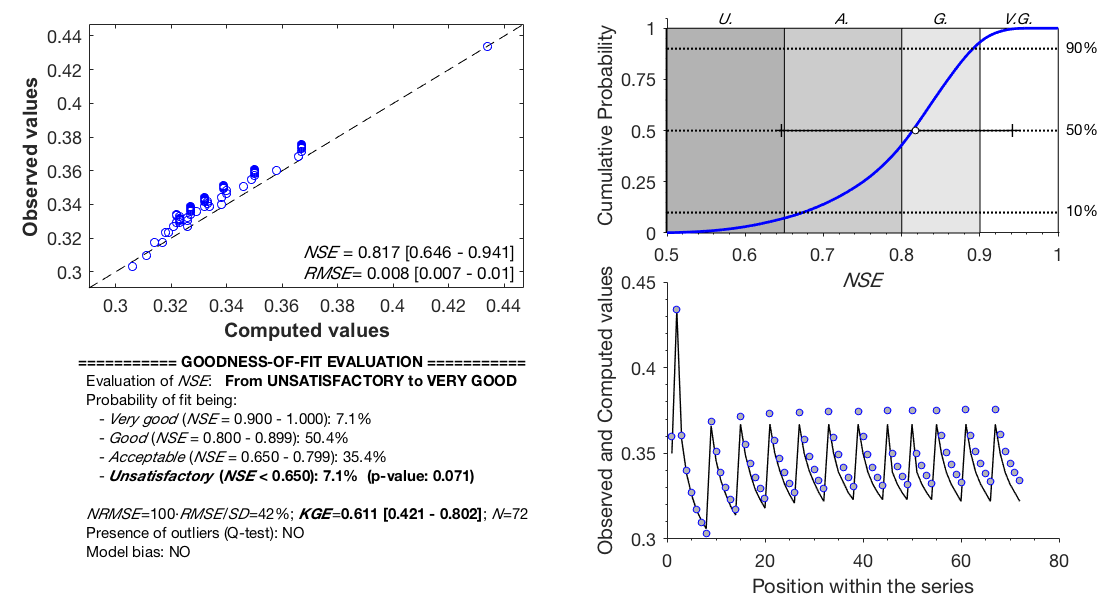

Note that FITEVAL computes the correction factors (CFi) and the uncertainty bounds automatically based on the selected probability distribution and saves the results in the working directory with the original filename and a "U" added at the end (with file extensions .txt, .pdf, .png). In addition, to assess the effect of uncertainty on the model evaluation results, FITEVAL also automatically computes the base case without uncertainty options for the same input data and writes the results with the same filename as the original input and the corresponding file extensions. For example, evaluating the model with the input file "data_ex0.in" with the options above will produce the following result ("data_ex0U.pdf"),

compared to base case ("data_ex0.pdf"),

The user can also compare both sets of results in the GUI by selecting the "Fig. No Unc." option as shown below,

Although CF is the recommended method for including uncertainty in the model evaluation, based on its robust statistical underpinning and plausibility (Ritter and Muñoz-Carpena, 2020), the FITEVAL GUI contains other methods to include observation errors in the model evaluation: a single probable error range (PER) for all data points, an individual PER for each data point, or individual asymmetric PER bounds. These can be selected in the Uncertainty options menu at the bottom of the screen.

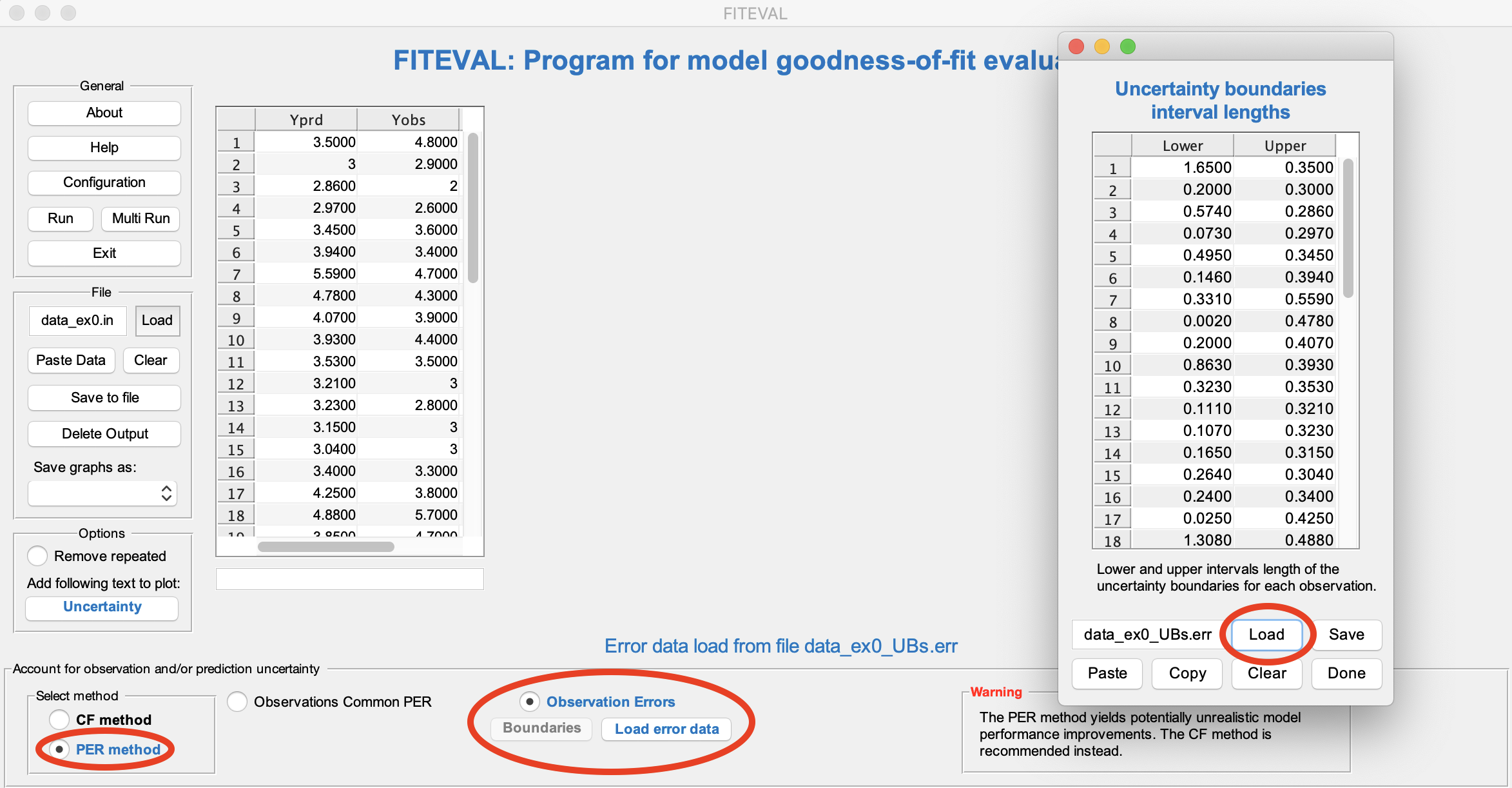

Individual observation errors

When the user selects the individual "Observation errors" option, an extension of the PER method is applied where the user can provide a series of individual errors for each data point. In this case, the user must provide an ASCII file with extension ".err" with the same number of lines as the corresponding “.in” file for the evaluation case. There are two options controlled automatically by the “.err” file contents:

- Single column: This is interpreted as a symmetric error case, where each individual error is given as a fraction of the observed value (e.g. PERi /100%).

- Two columns: This is intended for directly providing the error value in the same units as the observed variable (e.g. Oi ·PERi /100%). In this case, the ".err" file must contain two columns corresponding to the left (lower) and right (upper) bounds around each observed value used in the ".in" file. This option allows for asymmetric errors, but also for symmetric errors (when the two columns are identical).
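How the two ".err" layouts translate into bounds around each observation can be sketched in Python. This is one reading of the description above, not FITEVAL's code: a single value is treated as a fraction of the observed value, and two values are treated as the lower and upper interval lengths in the units of the observation. The function name `observation_bounds` is hypothetical.

```python
def observation_bounds(obs, err_rows):
    """Return (lower, upper) bounds for each observation.

    err_rows holds one list per observation: [fraction] for the symmetric
    single-column case, or [lower_length, upper_length] for two columns.
    """
    bounds = []
    for o, row in zip(obs, err_rows):
        if len(row) == 1:
            half_width = abs(o) * row[0]   # error given as a fraction of O_i
            lower, upper = o - half_width, o + half_width
        elif len(row) == 2:
            lower, upper = o - row[0], o + row[1]  # errors in units of O_i
        else:
            raise ValueError("expected one or two error values per line")
        bounds.append((lower, upper))
    return bounds


# Hypothetical values
symmetric = observation_bounds([10.0, 20.0], [[0.25], [0.25]])
asymmetric = observation_bounds([10.0, 20.0], [[1.0, 2.0], [3.0, 4.0]])
```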

In addition, when each Oi represents a measure of central tendency (like the mean or the median) of a set of repeated measurements Oi,j (with j=1 …Nj), each error value can be given as the standard error (=standard deviation of the set Oi,1 … Oi,j… Oi,Nj divided by √Nj) or the standard error of the median. A robust non-parametric method for calculating the latter (i.e., one that does not rely on the assumption of normality) is as the standard deviation of a set of medians obtained by bootstrapping the repeated measurements. This involves, firstly, repeatedly sampling with replacement Nk times from Oi,1 … Oi,j… Oi,Nj computing the corresponding median value for each resample (medk). Secondly, the standard deviation of the set of medk is calculated.
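The two estimators described in this paragraph can be sketched with the Python standard library. The function names, the number of resamples and the repeated measurements are hypothetical; the bootstrap follows the two steps above (resample with replacement and take the median, then take the standard deviation of the medians).

```python
import random
import statistics


def standard_error_mean(values):
    """Standard deviation of the repeated measurements divided by sqrt(Nj)."""
    return statistics.stdev(values) / len(values) ** 0.5


def bootstrap_se_median(values, n_resamples=2000, seed=0):
    """Standard error of the median as the spread of bootstrap medians."""
    rng = random.Random(seed)
    n = len(values)
    medians = [
        statistics.median(rng.choices(values, k=n))  # resample with replacement
        for _ in range(n_resamples)
    ]
    return statistics.stdev(medians)


# Hypothetical repeated measurements behind a single observation O_i
repeats = [4.8, 5.1, 5.0, 5.6, 4.9, 5.2, 7.0]
se_mean = standard_error_mean(repeats)
se_median = bootstrap_se_median(repeats)
```

Either value could then be written, one per observation, into the corresponding ".err" file.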

An example is shown below for the case of % error asymmetric bounds with the model data file "data_ex0.in" and the user supplied error series "data_ex0_UBs.err", both found in the "Examples" directory. Note that the user could add manually the individual % error bounds (or PER if that option is selected) directly in the GUI but the number of error values must match that of the original data.

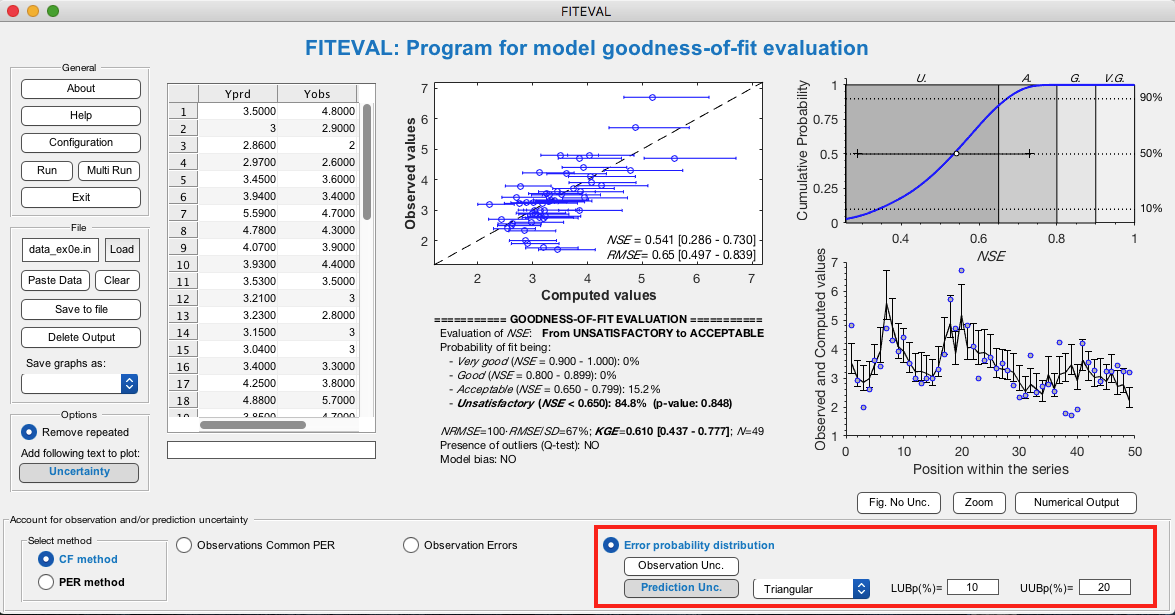

B) Running FITEVAL GUI accounting for uncertainty of model results

Model errors, like those generated from a Monte-Carlo uncertainty analysis, can also be included in the model evaluation based on the CF method by selecting the "Correction factor" and "Prediction Unc." options. For example, for the input file "data_ex0.in" and assuming a common error distribution for model predictions described by a triangular distribution with bounds between +10% and -20% from the predicted value, we obtain,

If individual errors are desired for each predicted point, the companion FITEVAL command line MatLab executable function (fiteval.p) can be used (see FITEVAL MatLab documentation here).

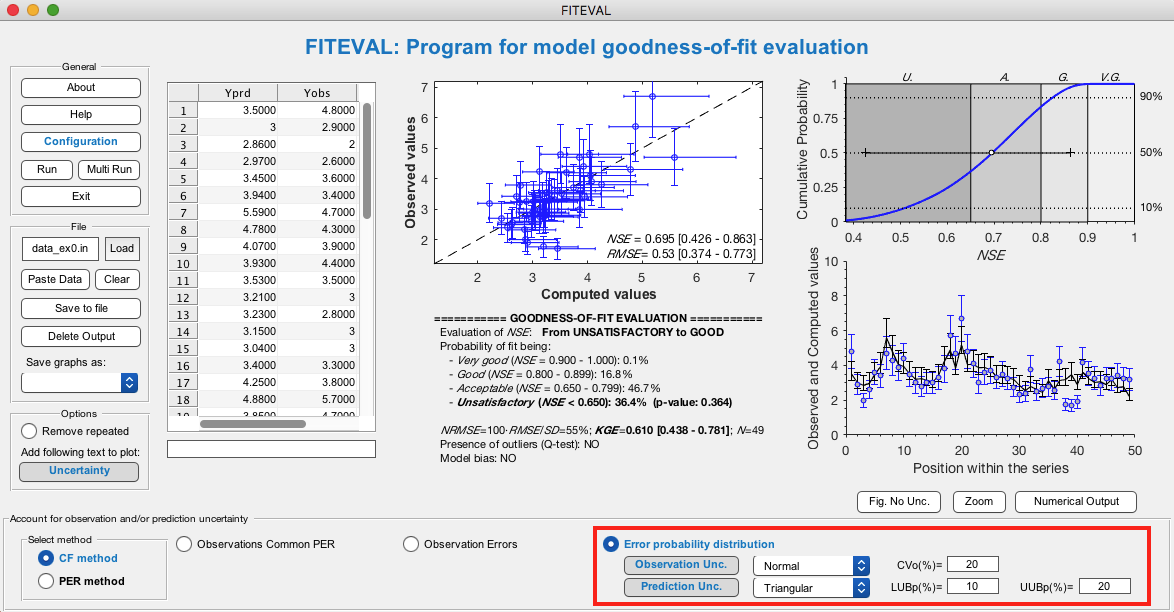

C) Running FITEVAL GUI accounting for both uncertainty of observed data and model results

Model and observation uncertainties can also be considered together in the evaluation based on the CF method. In this case, both the "Observation Unc." and "Prediction Unc." options are selected and a distribution is assigned to each source of uncertainty. For example, for the input file "data_ex0.in", assuming a common error distribution for observations described by a Normal distribution with CV=20%, and prediction errors described by a triangular distribution with bounds between +10% and -20% from the predicted values, we obtain,

To use these same options in a multi-run batch file, the corresponding line should be written as: data_ex0.in; 3 11 oN 20 pT 10 20. As before, if individual model errors are desired for each predicted and observed point, the companion FITEVAL command line MatLab executable function (fiteval.p) can be used (see FITEVAL MatLab documentation here).

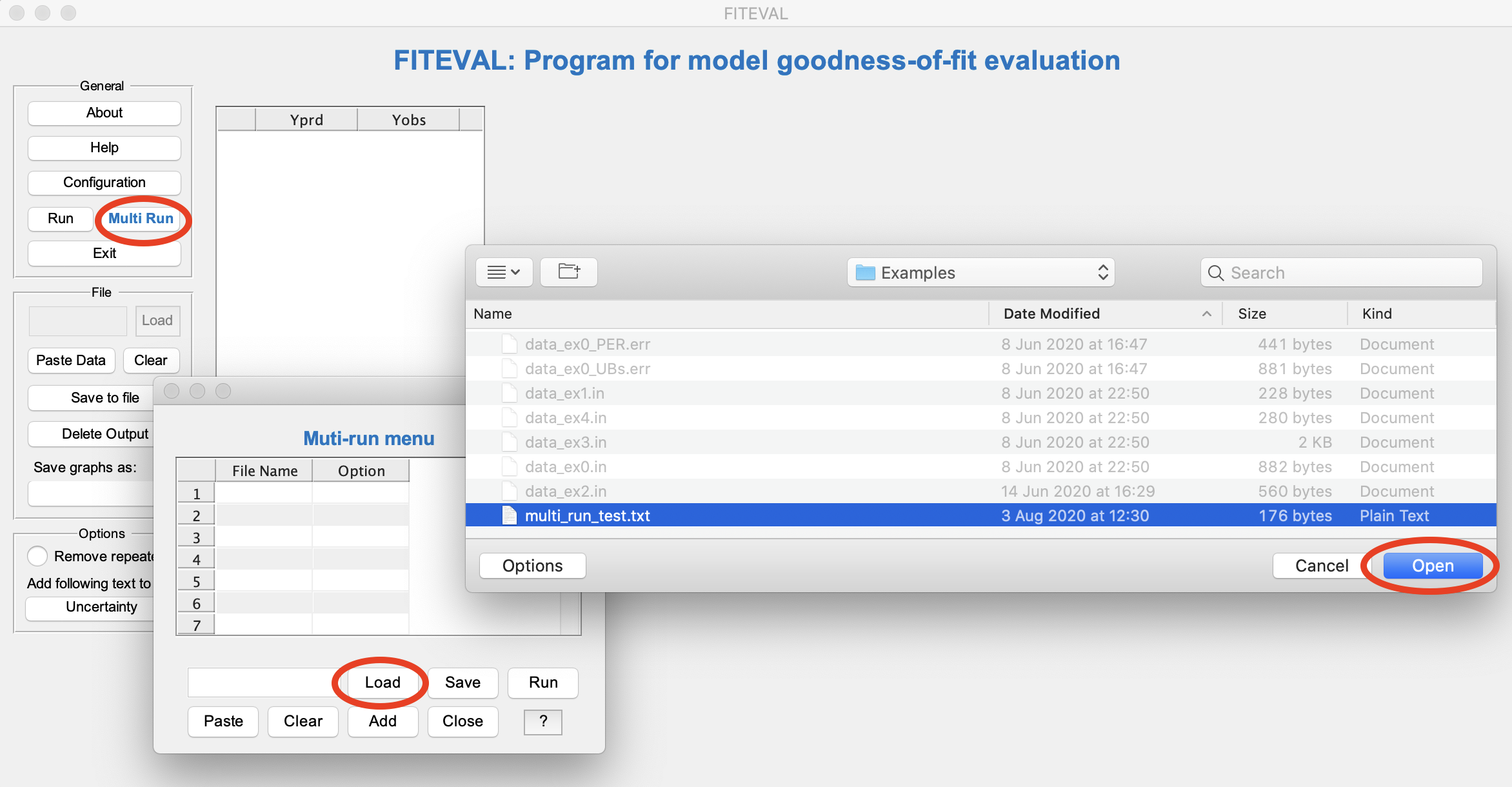

Batch processing of data files

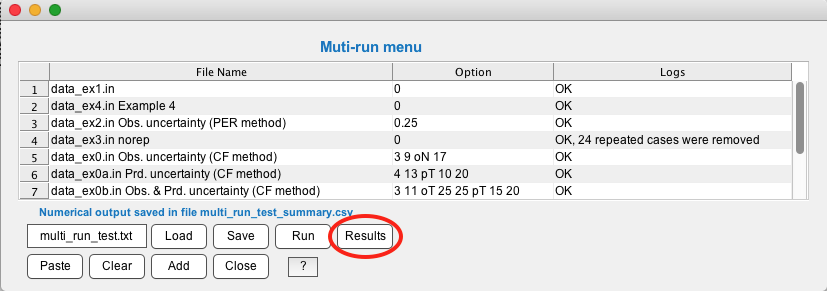

The FITEVAL GUI includes a simple but complete batch system for executing several model evaluations automatically. An ASCII text file with the multi-run information can be created and loaded, or the information can be prepared within the GUI. To access this feature, select "Multi Run" in the FITEVAL GUI and "Load" the example "multi_run_test.txt" included in the "Examples" directory, as shown in the illustration below,

The multi-run script can also be prepared within the GUI by adding new lines with "Add" in the menu and filling in the corresponding information as described above. The script can be saved into an ASCII text file by writing the new file name (with file extension .txt) in the text field where "multi-run.txt" (default) appears, and selecting "Save". All lines can also be cleared to start a new batch by pressing "Clear". Any line except the first can be deleted by leaving it blank.

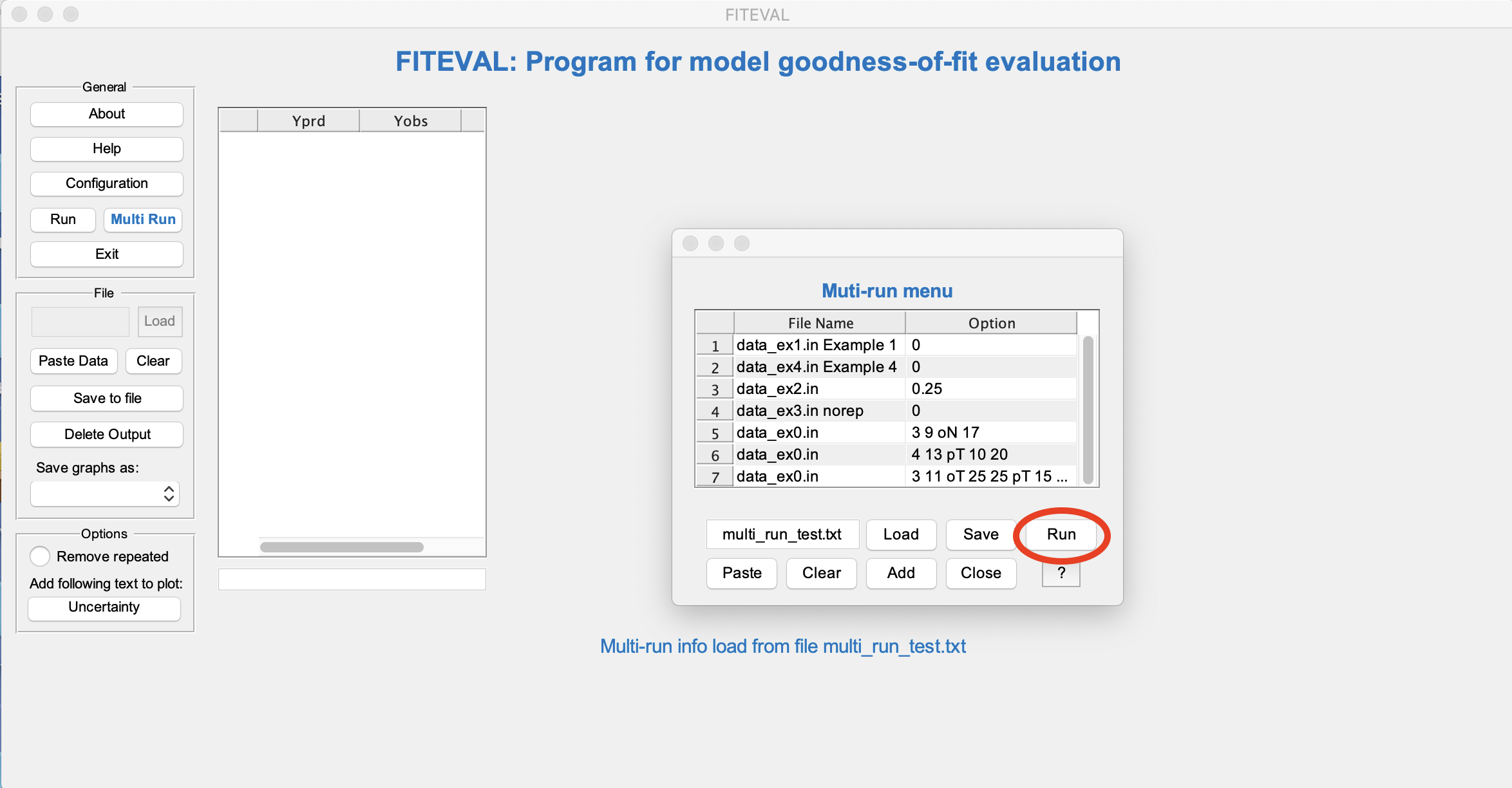

Once the batch file is loaded, multiple evaluations can be performed automatically by selecting "Run" in the pane as shown below. The different model evaluations will be run and shown in the GUI as they progress until the last one is completed. The blue information messages in the bottom of the GUI will show the current evaluation and the runs will be completed when the multi-run panel appears on the screen again.

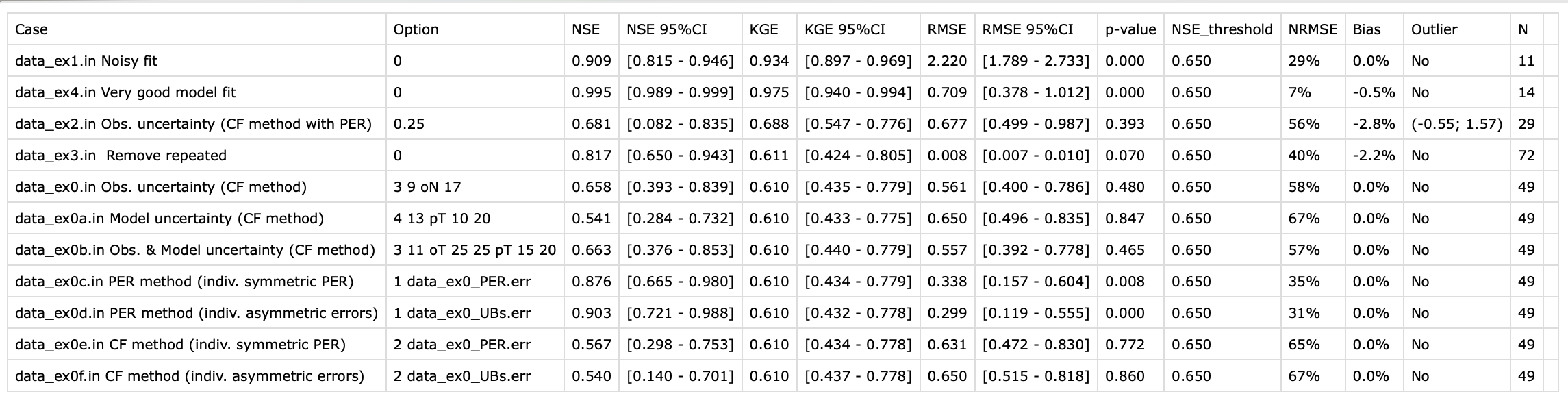

The contents and options of the example multi-run file ("multi_run_test.txt") shown in the last image above are briefly explained below. The uncertainty options are described in the section on running FITEVAL GUI accounting for observations and model uncertainty (see also the help file, UnOptions_help_mr.pdf). Note also that the text after the input file name is used as a label for the graphs and the summary table (except in the case of norep, where the label is read after that keyword).

data_ex1.in Noisy fit; 0 %use data_ex1.in with no uncertainty options ("0") for a noisy model fit

data_ex4.in Good model fit; 0 %use data_ex4.in with no uncertainty options ("0") for a good model fit

data_ex2.in Obs. uncertainty (CF method with PER); 0.25 %use data_ex2.in with PER=0.25 observed data uncertainty using the CF method

data_ex3.in norep Remove repeated; 0 %use data_ex3.in removing repeated values (norep)

data_ex0.in Obs. uncertainty (CF method); 3 9 oN 17 %use data_ex0.in with CF method for obs. data uncertainty with Normal error distribution and CV%=17

data_ex0a.in Model uncertainty (CF method); 4 13 pT 10 20 %use data_ex0a.in with CF method for model uncertainty with Triangular error distribution

data_ex0b.in Obs. & Model uncertainty (CF method); 3 11 oT 25 25 pT 15 20 %use data_ex0b.in with CF method for data and model uncertainty with Triangular error distributions for both

data_ex0c.in PER method (indiv. symmetric PER);1 data_ex0c_PER.err %use data_ex0c.in with PER method for obs. data uncertainty with individual symmetric error values for each observation. Symmetric errors are given in the .err file with 1 column, i.e. a probable error range (0<PER<1) for each observed value

data_ex0d.in PER method (indiv. asymmetric errors); 1 data_ex0d_UBs.err %use data_ex0d.in with PER method for obs. data uncertainty with individual asymmetric error values for each observation. Asymmetric errors are given in the .err file with 2 columns as the lower and upper interval length for each observed value.

data_ex0e.in CF method (indiv. symmetric PER); 2 data_ex0_PER.err %use data_ex0e.in with CF method for obs. data uncertainty with individual symmetric error values for each observation

data_ex0f.in CF method (indiv. asymmetric errors); 2 data_ex0_UBs.err %use data_ex0f.in with CF method for obs. data uncertainty with individual asymmetric error values for each observation

Note that there is a direct correspondence between the GUI selection and the multi-run case. For example, as presented above, if the user desires to specify in the GUI the CF method with a Normal distribution for observed data error with CV(%)=20 shown below,

the corresponding line for these same options in the multi-run batch file should be written as: data_ex0.in; 3 9 oN 20.
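For users who generate or check multi-run files with a script, the line layout used in the examples above can be split into its parts with a short Python sketch. The layout assumed here is inferred from those examples only (file name, optional norep keyword, label, then ";" followed by the options, then an optional "%" comment); the function name `parse_multirun_line` is hypothetical and the meaning of the option codes is left to UnOptions_help_mr.pdf.

```python
def parse_multirun_line(line):
    """Split one multi-run line into file, norep flag, label, options, comment."""
    body, _, comment = line.partition("%")   # text after the first % is a comment
    head, _, options = body.partition(";")   # options follow the semicolon
    tokens = head.split()
    filename, rest = tokens[0], tokens[1:]
    norep = bool(rest) and rest[0] == "norep"
    if norep:
        rest = rest[1:]                      # the label is read after norep
    return {
        "file": filename,
        "norep": norep,
        "label": " ".join(rest),
        "options": options.split(),
        "comment": comment.strip(),
    }


line = "data_ex0.in Obs. uncertainty (CF method); 3 9 oN 17 %CF, Normal errors"
parsed = parse_multirun_line(line)
```

A label that itself contains "%" or ";" would be split incorrectly by this sketch.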

After completing the multi-run evaluations, FITEVAL compiles a summary table in CSV format with all the statistics. It is saved in the same directory under the name of the multi-run file with the ending _summary.csv.

The table can be opened easily in Excel or an ASCII text editor, and can also be viewed by clicking on the new button "Results" that appears in the multi-run panel after the evaluations are completed, as shown below. The name of the new CSV summary table is also shown in blue letters in the panel. Please note that the CSV numeric format uses a dot "." as the decimal separator and not a comma "," as in some European standards. Similarly, commas "," are used to separate values, while some European standards use the semicolon ";". In these cases, the "Find and replace" function of any ASCII text editor can be used to replace commas by semicolons and then dots by commas, so that the file can be imported into Excel directly.
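The same find-and-replace can be scripted; a minimal Python sketch is shown here. The order of the two replacements matters: separators are converted first, otherwise the new decimal commas would be turned into semicolons as well. The function name and the sample text are hypothetical.

```python
def to_semicolon_decimal_comma(csv_text):
    """Convert 'comma-separated, dot-decimal' text to 'semicolon, comma-decimal'."""
    return csv_text.replace(",", ";").replace(".", ",")


sample = "NSE,RMSE\n0.95,1.25\n"
converted = to_semicolon_decimal_comma(sample)
print(converted)
```

Like the manual find-and-replace, this blunt conversion also alters dots and commas inside text fields such as file names or labels, so those may need to be restored afterwards.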

Library Requirements

If MatLab is already installed in your computer no additional libraries are needed to execute this program. If you currently do not have MatLab 9.1 (R2016) or above installed on your computer, the FITEVAL GUI installer will install the MatLab Compiler Runtime environment (MCR) as part of the package setup. Notice that you will need to have user rights to install the program (user member of the Administrator group for the machine). Contact your system administrator as needed. MCRInstall is a freely-distributable self-extracting utility. If you are running Windows 10 you will not typically need .NET2.0 (.NET is installed by default). The installer might also ask to install C++ run time environment and some additional tools. Please accept all default prompts to complete the installation. You might need to reboot the machine for the MCR to be available for use.

Program License

This program is distributed as Freeware/Public Domain under the terms of GNU-License. If the program is found useful the authors ask that acknowledgment is given to its use and to the journal publications behind the work (Ritter and Muñoz-Carpena, 2013, 2020) in any resulting publication and that the authors are notified. The source code is available from the authors upon request:

- Dr. Axel Ritter

Profesor Titular

Ingeniería Agroforestal

Universidad de La Laguna

Ctra. Geneto, 2; 38200 La Laguna (Spain)

Phone: +34 922 318 548

http://aritter.webs.ull.es/

- Dr. Rafael Muñoz-Carpena

Professor, Hydrology & Water Quality

Department of Agricultural & Biological Engineering

University of Florida

P.O. Box 110570

287 Frazier Rogers Hall

Gainesville, FL 32611-0570 (USA)

carpena@ufl.edu

© Copyright 2012 Axel Ritter & Rafael Muñoz-Carpena

References

- Harmel, R.D., Smith, P.K., 2007. Consideration of measurement uncertainty in the evaluation of goodness-of-fit in hydrologic and water quality modeling. J. Hydrol. 337, 326–336. doi: 10.1016/j.jhydrol.2007.01.043

- Harmel, R.D., Smith, P.K., Migliaccio, K.W., 2010. Modifying goodness-of-fit indicators to incorporate both measurement and model uncertainty in model calibration and validation. Trans. ASABE 53:55–63. doi: 10.13031/2013.29502

- Harmel, R.D., P.K. Smith, K.W. Migliaccio, I. Chaubey, K.R. Douglas-Mankin, B. Benham, S. Shukla, R. Muñoz-Carpena, B.J. Robson. 2014. Evaluating, interpreting, and communicating performance of hydrologic/water quality models considering intended use: A review and recommendations. Env. Mod. & Soft. 57:40-51. doi:10.1016/j.envsoft.2014.02.013

- Ritter, A. and R. Muñoz-Carpena. 2020. Integrated effects of data and model uncertainties on the objective evaluation of model goodness-of-fit with statistical significance testing (under review).

- Ritter, A. and R. Muñoz-Carpena. 2013. Predictive ability of hydrological models: objective assessment of goodness-of-fit with statistical significance. J. of Hydrology 480(1):33-45. doi:10.1016/j.jhydrol.2012.12.004

This page was last updated on January 06, 2026.